Kafka Messages That Are Too Long, Explained

Message size limits when sending with Kafka

⚠️ The experiments in this article were run on Kafka version 2.11.

Message overview

In Kafka, a message is a ProducerRecord. Besides the payload being sent, it carries:

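- topic: the topic to send to

- partition: the partition to send to

- headers: header information

- key: the record key

- value: the record value (the payload)

- timestamp (long): the timestamp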

Producer: message too long

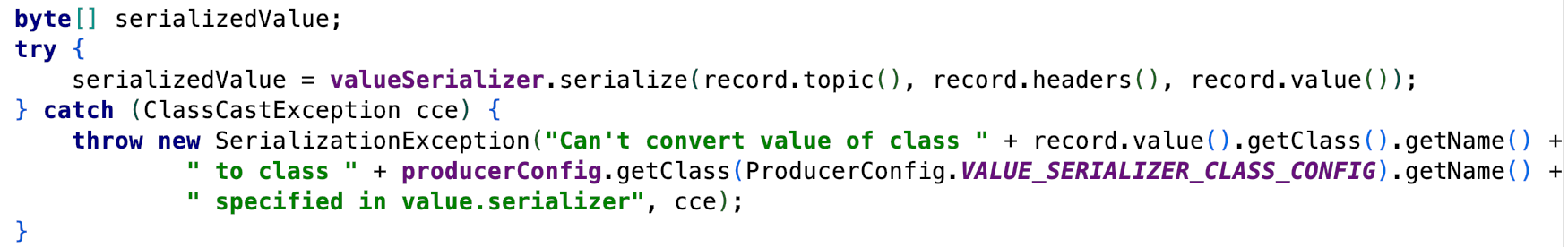

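When the producer sends a message, not every field listed above counts toward the message size. The details are in the producer's send code (KafkaProducer.doSend).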

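That code serializes the key and the value into byte arrays. The serializers receive the topic and the headers as context. It then estimates an upper bound on the serialized size from the serialized key, the serialized value and the headers, plus a fixed per-batch and per-record overhead. The topic and partition are not counted. The result is passed to ensureValidRecordSize(serializedSize), which decides whether the record is too large.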
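To make the point about which fields count more concrete, here is a minimal sketch. It does not use Kafka's classes. SimpleRecord and approximatePayloadSize are hypothetical names. It assumes String keys and values encoded as UTF-8, which is what a String serializer produces. The size it computes counts only key, value and header bytes. It deliberately ignores Kafka's fixed batch and record overhead, so the real serializedSize is always somewhat larger.

```java
import java.nio.charset.StandardCharsets;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical, simplified model of the fields a ProducerRecord carries (not Kafka's class).
final class SimpleRecord {
    final String topic;
    final Integer partition;            // null: let the partitioner choose
    final Map<String, byte[]> headers;
    final String key;
    final String value;
    final Long timestamp;               // null: the producer assigns one

    SimpleRecord(String topic, Integer partition, Map<String, byte[]> headers,
                 String key, String value, Long timestamp) {
        this.topic = topic;
        this.partition = partition;
        this.headers = headers == null ? Collections.<String, byte[]>emptyMap() : headers;
        this.key = key;
        this.value = value;
        this.timestamp = timestamp;
    }

    // Rough payload size: UTF-8 bytes of key and value plus header keys and values.
    // Topic, partition and timestamp are not added, and Kafka's fixed batch/record
    // overhead is ignored, so the real serializedSize is larger than this number.
    int approximatePayloadSize() {
        int size = 0;
        if (key != null) size += key.getBytes(StandardCharsets.UTF_8).length;
        if (value != null) size += value.getBytes(StandardCharsets.UTF_8).length;
        for (Map.Entry<String, byte[]> header : headers.entrySet()) {
            size += header.getKey().getBytes(StandardCharsets.UTF_8).length;
            if (header.getValue() != null) size += header.getValue().length;
        }
        return size;
    }

    public static void main(String[] args) {
        Map<String, byte[]> headers = new LinkedHashMap<>();
        headers.put("trace", new byte[] {1, 2, 3});
        SimpleRecord record = new SimpleRecord("a-rather-long-topic-name", 0, headers, "key", "value", 0L);
        // key (3) + value (5) + header key "trace" (5) + header value (3) = 16
        System.out.println(record.approximatePayloadSize());
    }
}
```

A long topic name does not change the result. A larger key, value or header set does.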

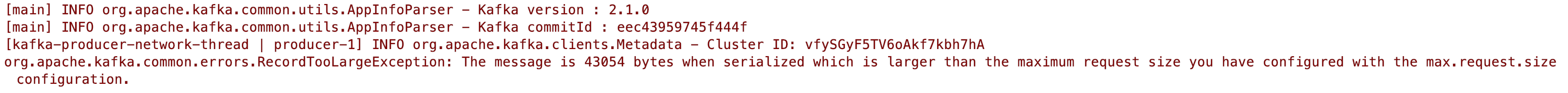

ensureValidRecordSize checks the size against two settings: maxRequestSize (max.request.size) and totalMemorySize (buffer.memory). The message can be sent only if its size exceeds neither of them.

```java
private void ensureValidRecordSize(int size) {
    if (size > this.maxRequestSize)
        throw new RecordTooLargeException("The message is " + size +
                " bytes when serialized which is larger than the maximum request size you have configured with the " +
                ProducerConfig.MAX_REQUEST_SIZE_CONFIG +
                " configuration.");
    if (size > this.totalMemorySize)
        throw new RecordTooLargeException("The message is " + size +
                " bytes when serialized which is larger than the total memory buffer you have configured with the " +
                ProducerConfig.BUFFER_MEMORY_CONFIG +
                " configuration.");
}
```
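The two checks can be tried out without a broker. The following is a hypothetical standalone version of the same comparisons. RecordSizeGuard and rejectedBy are illustrative names. It returns the name of the rejecting setting instead of throwing RecordTooLargeException. It plugs in the producer defaults: max.request.size = 1048576 bytes (1 MiB) and buffer.memory = 33554432 bytes (32 MiB).

```java
// Hypothetical standalone version of the two checks in ensureValidRecordSize;
// it returns the name of the rejecting setting instead of throwing.
final class RecordSizeGuard {
    // Producer defaults: max.request.size = 1 MiB, buffer.memory = 32 MiB.
    static final int DEFAULT_MAX_REQUEST_SIZE = 1024 * 1024;
    static final long DEFAULT_BUFFER_MEMORY = 32L * 1024 * 1024;

    // Returns null if the size passes both checks; otherwise the setting that
    // rejects it, checked in the same order as the Kafka code above.
    static String rejectedBy(int size, int maxRequestSize, long totalMemorySize) {
        if (size > maxRequestSize) return "max.request.size";
        if (size > totalMemorySize) return "buffer.memory";
        return null;
    }

    public static void main(String[] args) {
        int[] sizes = {512 * 1024, 1024 * 1024, 1024 * 1024 + 1};
        for (int size : sizes) {
            String setting = rejectedBy(size, DEFAULT_MAX_REQUEST_SIZE, DEFAULT_BUFFER_MEMORY);
            System.out.println(size + " bytes -> " + (setting == null ? "ok" : "rejected by " + setting));
        }
    }
}
```

Because both comparisons use `>`, an estimate of exactly 1048576 bytes still passes with the defaults. With the defaults, max.request.size is far smaller than buffer.memory, so it is normally the first limit a large message hits.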

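A single message that is too long therefore fails with a RecordTooLargeException carrying one of the two messages above.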

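One point to watch: if you simply send the message, without monitoring it through a Callback or retrieving the result through a Future, an over-long message produces no visible error. send() does not throw it. The failure is only recorded in the returned Future and passed to the Callback.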

Using a Future to get the result

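```java
// get() blocks until the send completes; a failed send surfaces here as an ExecutionException
Future<RecordMetadata> send = kafkaProducer.send(new ProducerRecord<>("topic", "key", "value"));
RecordMetadata recordMetadata = send.get();
System.out.println(recordMetadata);
```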

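The Javadoc of Future.get() declares @throws ExecutionException if the computation threw an exception. A failed send is therefore rethrown from get(), wrapped in an ExecutionException.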

```java
/**
 * Waits if necessary for the computation to complete, and then
 * retrieves its result.
 *
 * @return the computed result
 * @throws CancellationException if the computation was cancelled
 * @throws ExecutionException if the computation threw an
 * exception
 * @throws InterruptedException if the current thread was interrupted
 * while waiting
 */
V get() throws InterruptedException, ExecutionException;
```
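Both behaviours above can be shown with a JDK-only analogy. The first is that an unobserved failure is silent. The second is that get() wraps the failure. No Kafka classes are used here. fakeSend and describe are hypothetical stand-ins, and the sketch assumes that send() reports an oversized record through the returned Future rather than by throwing.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;

final class FailedSendDemo {
    // Hypothetical stand-in for send(): an oversized record does not throw here;
    // the failure is stored in the returned Future instead.
    static Future<String> fakeSend(int size, int maxRequestSize) {
        CompletableFuture<String> result = new CompletableFuture<>();
        if (size > maxRequestSize) {
            result.completeExceptionally(new IllegalStateException(
                    "The message is " + size + " bytes when serialized"));
        } else {
            result.complete("ok");
        }
        return result;
    }

    // Calls get() and unwraps the ExecutionException to reach the original cause.
    static String describe(Future<String> future) {
        try {
            return "sent: " + future.get();
        } catch (ExecutionException e) {
            return "failed: " + e.getCause().getMessage();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();   // preserve the interrupt status
            return "interrupted";
        }
    }

    public static void main(String[] args) {
        fakeSend(2_000_000, 1_048_576);   // fire-and-forget: the failure goes unnoticed
        System.out.println(describe(fakeSend(2_000_000, 1_048_576)));
        System.out.println(describe(fakeSend(100, 1_048_576)));
    }
}
```

The first call prints nothing even though it failed. Only the calls that go through get() reveal the error, via ExecutionException.getCause().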

Using a Callback for monitoring

First, look at the interface Kafka provides specifically for callbacks:

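```java
// English Javadoc omitted. In short: this is the asynchronous callback, invoked once the
// record sent to the server has been acknowledged (or the send has failed).
// One of the two parameters is always non-null: if no exception occurred, metadata
// holds the result; if an exception occurred, exception is set instead.
public void onCompletion(RecordMetadata metadata, Exception exception);
```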

```java
kafkaProducer.send(new ProducerRecord<>("topic", "key", "value"), new Callback() {
    @Override
    public void onCompletion(RecordMetadata metadata, Exception exception) {
        // A non-null exception means the send failed, e.g. with RecordTooLargeException
        if (exception != null) {
            exception.printStackTrace();
        }
    }
});
```
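The callback contract can be sketched without Kafka. Exactly one of the two arguments is non-null, and the outcome is reported only through the callback. SendCallback and fakeSend are hypothetical names that mirror, but are not, Kafka's Callback and send(). Kafka generally runs callbacks on the producer's I/O thread, whereas this sketch calls the callback inline for simplicity.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

final class CallbackDemo {
    // Hypothetical mirror of Kafka's Callback: exactly one argument is non-null.
    interface SendCallback {
        void onCompletion(String metadata, Exception exception);
    }

    // Hypothetical sender that reports the outcome only through the callback.
    static void fakeSend(String value, int maxRequestSize, SendCallback callback) {
        int size = value.getBytes(StandardCharsets.UTF_8).length;
        if (size > maxRequestSize) {
            callback.onCompletion(null, new IllegalStateException(
                    "The message is " + size + " bytes when serialized"));
        } else {
            callback.onCompletion("accepted " + size + " bytes", null);
        }
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        SendCallback callback = (metadata, exception) -> {
            if (exception != null) {
                log.add("error: " + exception.getMessage());
            } else {
                log.add("ok: " + metadata);
            }
        };
        fakeSend("hello", 1024, callback);
        fakeSend("x".repeat(2048), 1024, callback);   // String.repeat needs Java 11+
        log.forEach(System.out::println);
    }
}
```

Checking `exception != null` first, as the Kafka example above does, is the natural way to branch on this contract.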

Log level DEBUG

Setting the log level to DEBUG also makes the producer print this error to the log output.

Future vs. Callback

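Comparing the two approaches, Future comes from Java's standard concurrency library rather than being designed for Kafka, and you must catch its exceptions explicitly. Callback is the callback mechanism Kafka itself provides for this purpose, so prefer it where possible.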

Broker-side message limit

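The producer has a parameter that limits message size, and the broker has one too: message.max.bytes. Its default is 1000012 bytes (slightly under 1 MB), so by default the broker accepts a little less than 1 MB. The limit applies to a record batch rather than to a single record, because the newer producer client always groups messages into batches (see the RecordBatch interface).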

```java
/**
 * A record batch is a container for records. In old versions of the record format (versions 0 and 1),
 * a batch consisted always of a single record if no compression was enabled, but could contain
 * many records otherwise. Newer versions (magic versions 2 and above) will generally contain many records
 * regardless of compression.
 */
public interface RecordBatch extends Iterable<Record> {
    ...
}
```

Setting the broker's maximum message size

To change the message size the broker accepts, add message.max.bytes=100000 to the broker's server.properties file. Set the value to whatever you need. The unit is bytes.
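The setting is an ordinary key=value line in a Java properties file. As a hedged illustration only, the following sketch reads the value with java.util.Properties and falls back to the 1000012-byte default given above when the line is absent. The broker's own config loading is more involved than this. BrokerConfigDemo and messageMaxBytes are hypothetical names.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

final class BrokerConfigDemo {
    // Default for message.max.bytes as given in the text (bytes).
    static final int DEFAULT_MESSAGE_MAX_BYTES = 1000012;

    // Reads message.max.bytes from server.properties text, falling back to the default.
    static int messageMaxBytes(String serverProperties) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(serverProperties));
        } catch (IOException e) {
            throw new IllegalArgumentException("unreadable properties", e);
        }
        String raw = props.getProperty("message.max.bytes");
        return raw == null ? DEFAULT_MESSAGE_MAX_BYTES : Integer.parseInt(raw.trim());
    }

    public static void main(String[] args) {
        String edited = "broker.id=0\nmessage.max.bytes=100000\n";
        String untouched = "broker.id=0\n";
        System.out.println(messageMaxBytes(edited));     // value added as described above
        System.out.println(messageMaxBytes(untouched));  // falls back to the default
    }
}
```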

What happens when the producer's limit is larger than the broker's

Suppose the producer allows messages of up to 1 MB while the broker is configured for 512 KB. What happens?

The broker rejects the message and the producer receives a RecordTooLargeException. The message is never delivered to consumers. The error reads: org.apache.kafka.common.errors.RecordTooLargeException: The request included a message larger than the max message size the server will accept.
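The scenario has two checkpoints, and they can be modelled in a few lines. SizeLimitPipeline and its Outcome values are hypothetical names. The sizes ignore Kafka's framing overhead, and the model assumes the producer check runs before the broker check, as described in this article.

```java
final class SizeLimitPipeline {
    enum Outcome { ACCEPTED, REJECTED_BY_PRODUCER, REJECTED_BY_BROKER }

    // Hypothetical model: the producer checks max.request.size before sending,
    // then the broker checks message.max.bytes. Either rejection reaches the
    // application as a RecordTooLargeException; sizes here ignore framing overhead.
    static Outcome send(int batchBytes, int producerMaxRequestSize, int brokerMessageMaxBytes) {
        if (batchBytes > producerMaxRequestSize) return Outcome.REJECTED_BY_PRODUCER;
        if (batchBytes > brokerMessageMaxBytes) return Outcome.REJECTED_BY_BROKER;
        return Outcome.ACCEPTED;
    }

    public static void main(String[] args) {
        int producerLimit = 1024 * 1024;   // 1 MB, as in the scenario above
        int brokerLimit = 512 * 1024;      // 512 KB
        for (int size : new int[] {100 * 1024, 600 * 1024, 2 * 1024 * 1024}) {
            System.out.println(size + " bytes -> " + send(size, producerLimit, brokerLimit));
        }
    }
}
```

A 600 KB message is the interesting case. It passes the producer's own check, so nothing fails locally, and it is only rejected once it reaches the broker.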

Consumer-side limits

The consumer also applies limits. Three relevant parameters are:

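- fetch.max.bytes: the maximum total size of the data (possibly many messages) the server returns for one fetch

- fetch.min.bytes: the minimum amount of data the server returns for a fetch

- fetch.max.wait.ms: the maximum time the server waits before answering a fetch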

Once fetch.max.wait.ms elapses, the server responds even if the total size of the available messages has not reached fetch.min.bytes.
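The interplay between fetch.min.bytes and fetch.max.wait.ms comes down to a single condition: answer as soon as either one is satisfied. FetchWaitDemo and shouldRespond are hypothetical names. This is a simplified model of the rule stated above, not the broker's actual implementation.

```java
final class FetchWaitDemo {
    // Hypothetical model of when the broker answers a pending fetch: as soon as
    // fetch.min.bytes of data is available, or once fetch.max.wait.ms has passed.
    static boolean shouldRespond(long availableBytes, long waitedMs,
                                 int fetchMinBytes, int fetchMaxWaitMs) {
        return availableBytes >= fetchMinBytes || waitedMs >= fetchMaxWaitMs;
    }

    public static void main(String[] args) {
        int minBytes = 1024;
        int maxWaitMs = 500;
        System.out.println(shouldRespond(100, 10, minBytes, maxWaitMs));   // too little data, keep waiting
        System.out.println(shouldRespond(100, 500, minBytes, maxWaitMs));  // timed out: answer with what exists
        System.out.println(shouldRespond(2048, 0, minBytes, maxWaitMs));   // enough data: answer at once
    }
}
```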

When fetch.max.bytes is too small

What happens if fetch.max.bytes is set too small? Is data that exceeds it simply never returned? Let's check the documentation:

The maximum amount of data the server should return for a fetch request. Records are fetched in batches by the consumer, and if the first record batch in the first non-empty partition of the fetch is larger than this value, the record batch will still be returned to ensure that the consumer can make progress.

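In other words, fetch.max.bytes caps the total size of a fetch response, but the cap is not absolute. Records are returned in batches, and if the first batch in the first non-empty partition is larger than the limit, that batch is still returned so the consumer can keep making progress.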

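Conclusion: the consumer-side parameters only affect how much data is read per fetch. They never cause an oversized message to be rejected.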
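The "soft limit" rule quoted above can be sketched as a loop over record batch sizes. It keeps adding batches while the total stays within fetch.max.bytes, but it always includes the first batch. FetchMaxBytesDemo and selectBatches are hypothetical names. The model is simplified: it ignores the per-partition limit max.partition.fetch.bytes and treats sizes as plain byte counts.

```java
import java.util.ArrayList;
import java.util.List;

final class FetchMaxBytesDemo {
    // Hypothetical model of filling one fetch response from record batch sizes
    // (in bytes): batches are added while they fit in fetch.max.bytes, but the
    // first batch is always returned, even if it alone exceeds the limit.
    static List<Integer> selectBatches(List<Integer> batchSizes, int fetchMaxBytes) {
        List<Integer> selected = new ArrayList<>();
        long total = 0;
        for (int size : batchSizes) {
            boolean first = selected.isEmpty();
            if (!first && total + size > fetchMaxBytes) break;
            selected.add(size);
            total += size;
        }
        return selected;
    }

    public static void main(String[] args) {
        // Every batch is larger than the limit: only the first one comes back.
        System.out.println(selectBatches(List.of(2000, 2000, 2000), 1024));
        // Small batches: as many as fit within 1024 bytes.
        System.out.println(selectBatches(List.of(300, 300, 300, 300), 1024));
    }
}
```

The first case matches the experiment that follows. When every message is larger than 1024 bytes, each fetch can only ever carry one of them.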

Trying a small fetch.max.bytes in practice

```java
// Cap each fetch at 1024 bytes, ask for at least 1024 bytes, and wait at most 1 ms
properties.put(ConsumerConfig.FETCH_MAX_BYTES_CONFIG, 1024);
properties.put(ConsumerConfig.FETCH_MIN_BYTES_CONFIG, 1024);
properties.put(ConsumerConfig.FETCH_MAX_WAIT_MS_CONFIG, 1);
...
while (true) {
    ConsumerRecords<String, String> records = kafkaConsumer.poll(Duration.ofSeconds(Integer.MAX_VALUE));
    // Print how many records this poll returned
    System.out.println(records.count());
}
```

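Start a consumer with the three parameters above. They set the minimum and maximum size of the data returned per fetch and the longest time the server may wait before answering. The number of records returned by each poll is printed to standard output.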

Result: because every message sent is larger than 1024 B, the consumer receives only one record per fetch, so the output is 1...
